
Laboratory of Information Photonics,

Department of Information and Physical Sciences,

Graduate School of Information Science and Technology, Osaka University

Superposition imaging for extended depth-of-field and field-of-view


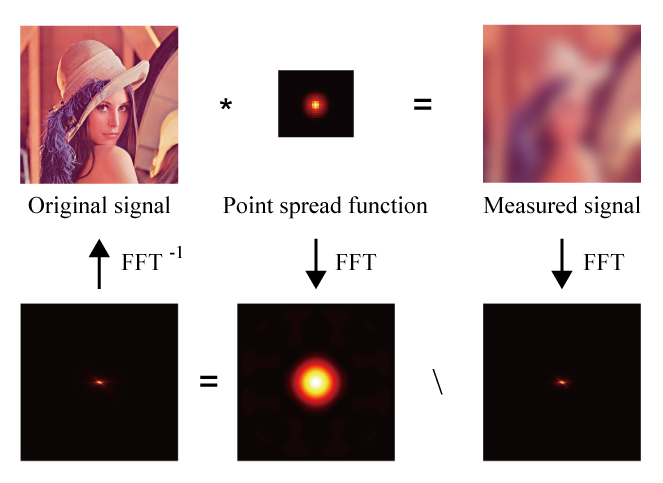

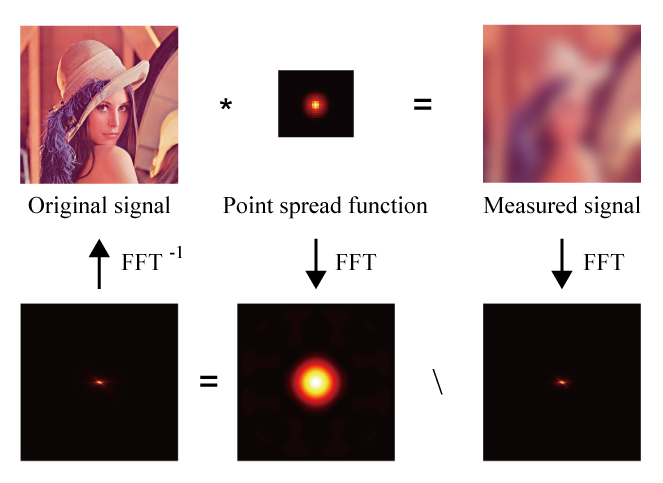

Fig. 1. Image restoration by deconvolution filtering

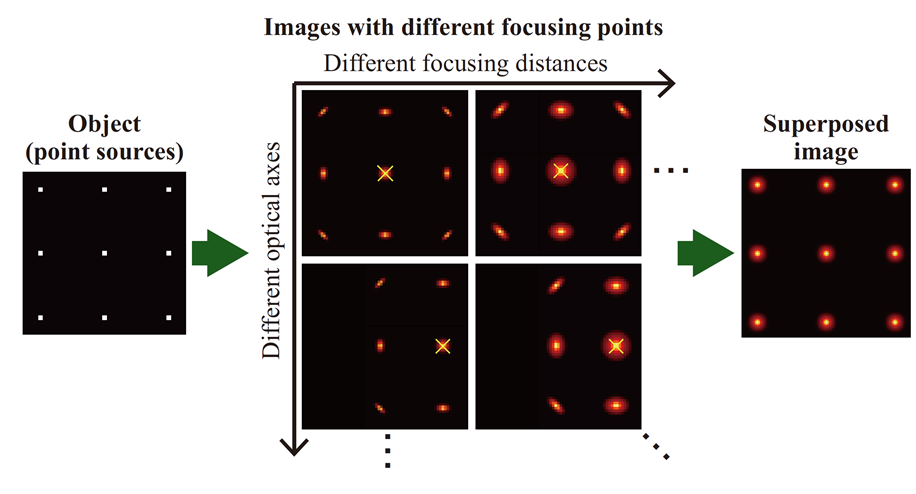

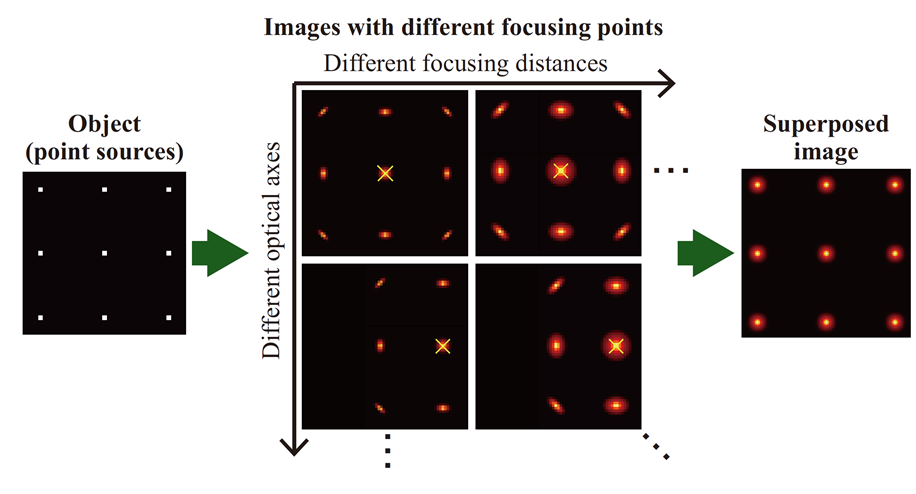

Fig. 2. Three-dimensional equalization of the point spread function by superposition

The depth-of-field (DOF) and field-of-view (FOV) of an imaging system are limited by aberrations. An image blurred by aberrations can nevertheless be restored to a sharp image by deconvolution filtering, provided that the point spread function (PSF) is space-invariant, as shown in Fig. 1 [1]. In our proposed method, the three-dimensional variation of the PSF is equalized by superposing images captured with different focusing distances and optical axes, so that a sharp image of three-dimensional objects can be reconstructed by deconvolution with a single filter, as illustrated in Fig. 2 [2]. This scheme realizes wide-FOV and deep-DOF imaging systems without the loss of light energy caused by a small aperture stop and without a complicated lens design.
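The deconvolution step for a space-invariant PSF can be sketched as follows. This is a minimal illustration rather than the filter used in [1] or [2]: it assumes a Gaussian PSF as a stand-in for a measured one, circular (FFT-based) convolution, and a Wiener-type regularized inverse filter. The function names and the `nsr` parameter are hypothetical.

```python
import numpy as np

def gaussian_psf(shape, sigma):
    """Centered, unit-sum Gaussian PSF (an assumed stand-in for a real PSF)."""
    h, w = shape
    y = np.arange(h) - h // 2
    x = np.arange(w) - w // 2
    yy, xx = np.meshgrid(y, x, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def blur(image, psf):
    """Circular convolution of the image with a space-invariant PSF via FFT."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))  # move PSF center to index (0, 0)
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf))

def wiener_deconvolve(blurred, psf, nsr=1e-3):
    """Regularized inverse filter; nsr is an assumed noise-to-signal ratio."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    filt = np.conj(otf) / (np.abs(otf) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(blurred) * filt))

if __name__ == "__main__":
    # Synthetic object: a bright square on a dark background.
    obj = np.zeros((64, 64))
    obj[24:40, 24:40] = 1.0
    psf = gaussian_psf(obj.shape, sigma=1.5)
    blurred = blur(obj, psf)
    restored = wiener_deconvolve(blurred, psf, nsr=1e-4)
    print("error before:", np.linalg.norm(blurred - obj))
    print("error after: ", np.linalg.norm(restored - obj))
```

A single filter of this kind is only valid when the same PSF applies across the whole image, which is why the PSF must first be made space-invariant.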

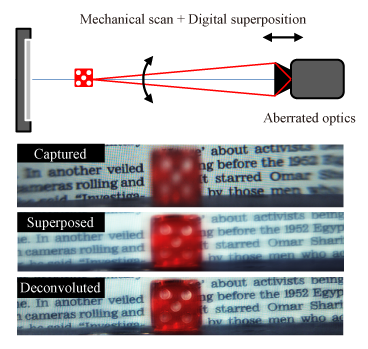

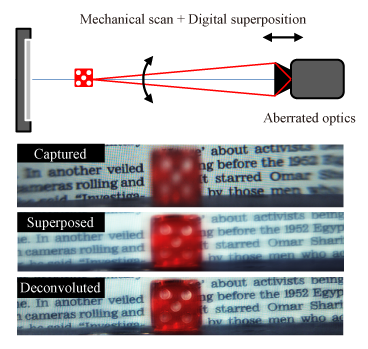

Fig. 3. Superposition imaging with mechanical scanning of optics

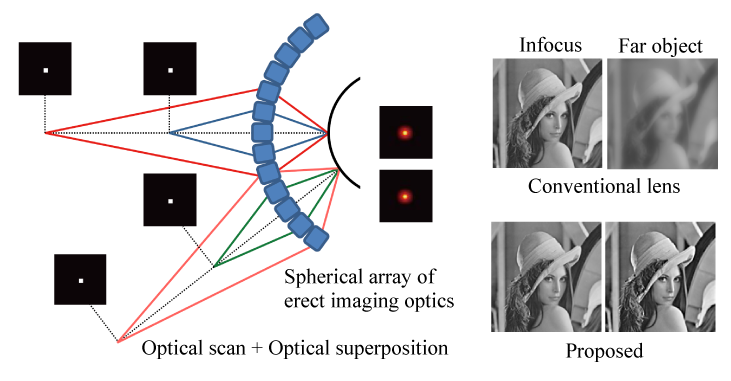

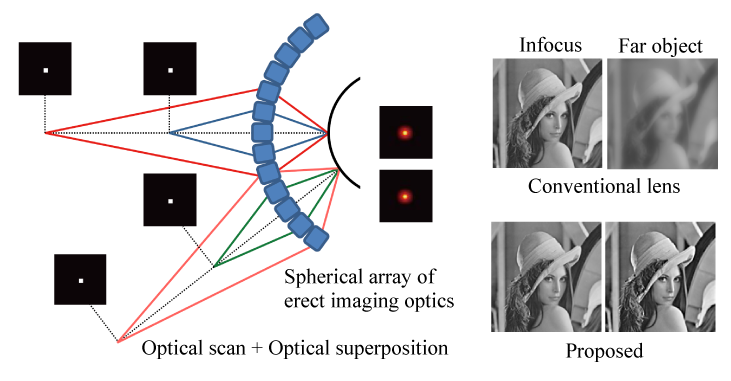

Fig. 4. Superposition imaging with compound-eye optics

Two implementations of superposition imaging have been demonstrated. In the mechanical scanning method, images with different focusing points are captured by mechanically scanning aberrated optics and are superposed computationally, as shown in Fig. 3 [3]. This implementation acquires a sharp image of three-dimensional objects with aberrated optics and requires no prior information about the objects (e.g., their three-dimensional position or shape). In the optical scanning method with a superposition compound eye, an array of erect imaging optics, with a spherical arrangement and/or distortion of the erect lenses, scans the focusing points in object space and superposes the images optically, as shown in Fig. 4 [4]. This implementation realizes the superposition process in a single shot. The FOV can be extended omnidirectionally owing to the monocentric optical design.
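The effect of superposing images taken at different focus positions can be illustrated with a toy model. The sketch below is not the optical model of [3] or [4]: it assumes a Gaussian defocus PSF whose width grows with the distance between object depth and focus position, considers only the focus scan (not the optical-axis scan), and uses hypothetical names and parameter values (`sigma0`, `gain`, arbitrary depth units). It compares how much the PSF differs between two object depths with a fixed focus and with a focus sweep.

```python
import numpy as np

def defocus_psf(size, depth, focus, sigma0=0.8, gain=1.5):
    """Toy defocus model: Gaussian PSF whose width grows with |depth - focus|."""
    sigma = np.hypot(sigma0, gain * (depth - focus))
    r = np.arange(size) - size // 2
    yy, xx = np.meshgrid(r, r, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def superposed_psf(size, depth, focus_positions):
    """Average of the PSFs seen at one depth while the focus is scanned."""
    return np.mean([defocus_psf(size, depth, f) for f in focus_positions], axis=0)

def psf_mismatch(a, b):
    """L1 distance between two unit-sum PSFs (0 means identical)."""
    return np.abs(a - b).sum()

if __name__ == "__main__":
    size = 65
    near, far = 0.0, 1.5                   # two object depths (arbitrary units)
    sweep = np.linspace(-3.0, 5.0, 33)     # assumed focus scan range

    # Fixed focus at the near object: the PSF depends strongly on depth.
    single = psf_mismatch(defocus_psf(size, near, near),
                          defocus_psf(size, far, near))
    # Focus sweep: the superposed PSF is nearly the same at both depths.
    swept = psf_mismatch(superposed_psf(size, near, sweep),
                         superposed_psf(size, far, sweep))
    print("PSF mismatch, fixed focus:", single)
    print("PSF mismatch, focus sweep:", swept)
```

In this model the superposed PSF is broader than the in-focus PSF but varies far less with depth, so one deconvolution filter can be applied to the whole superposed image.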

Superposition imaging can also be applied to incoherent projectors. In this case, the PSF is equalized by superposing the projected images, and deconvolution is applied to the image before projection. This scheme realizes an incoherent projector with extended DOF and FOV [5].


1. J. W. Goodman, Introduction to Fourier Optics (McGraw-Hill, 1996).

2. R. Horisaki, T. Nakamura, and J. Tanida, “Superposition imaging for three-dimensionally space-invariant point spread functions,” Appl. Phys. Express 4, p. 112501 (2011).

3. T. Nakamura, R. Horisaki, and J. Tanida, “Experimental verification of computational superposition imaging for compensating defocus and off-axis aberrated images,” in Proc. of Computational Optical Sensing and Imaging (COSI), CM2B.4, June 2012 (California).

4. T. Nakamura, R. Horisaki, and J. Tanida, “Computational superposition compound eye imaging for extended depth-of-field and field-of-view,” Opt. Express 20, pp. 27482-27495 (2012).

5. T. Nakamura, R. Horisaki, and J. Tanida, “Computational superposition projector for extended depth of field and field of view,” Opt. Lett. 38, pp. 1560-1562 (2013).
